Andrew Welch



Dock Life: Using Docker for All The Things!

Embracing Docker for All The Things gives you a more flexible, robust, and transportable way to use tools on your computer without messy setup

Docker is a devops tool that some people find intimidating because there is much to learn in order to build things with it.

And while that’s true, it’s actually quite simple to start using Docker for some very practical and useful things, by leveraging what other people have created.

While writing an iPhone app might be complex, using one isn’t. Same with Docker!

In this article, we’re going to talk a bit about the philosophy behind using Docker for “All The Things”, and show a number of practical examples you can start with today.

All you need is Docker Desktop installed on your computer, and you can immediately start using it for some very useful things.

So let’s get going!

We don’t install node. We don’t install composer. We don’t install a whole host of things that you might be used to using every day.

But with a little magic, you’ll never notice the difference.

You’ll still just type npm install <package> or composer require <package>, and everything will just work.

Why bother?

If everything is going to work the same, why should we bother using Docker?

There are a number of advantages to the “containerized” approach that Docker uses:

- Docker images are disposable. If something goes wrong, you throw them away, without them ever affecting your actual computer

- You can run specific versions. You’re not locked into the version of the tool you have installed on your computer, you can spin up any version of the tool you need

- Switching to a new computer is easy. You don’t have to spend hours meticulously reconfiguring your shiny new MacBook Pro with all the interconnected tools & packages you need

- You can experiment with aplomb. If you’re curious about a new tool or technology, you can just give it a whirl. If it doesn’t work out, just discard the image

- Docker images are self-contained. You’re not going to have to scramble to download a set of interconnected dependencies in order to get them to work

…and there are many others, too.

Docker images & containers

Instead of installing all of the tools & packages you’re used to using, we use Docker images that someone else has created that contain these tools & packages.

Think of a Docker image as a bundle of all of the files, executables, etc. needed to run some tool we want to use.

There is a central registry called Docker Hub where organizations and people can publish their Docker images, and we get to use them!

Images are used as a factory to spin up a Docker container, which is just a running instance based on that image.

These running Docker containers are what do the actual work, and they are where the term “containerization” comes from.

The first time you run a container from a given image, Docker downloads that image. From then on, it just runs the container, does the work, then exits.

Shell Aliases

In order to seamlessly provide access to various tools run via Docker, we’re going to use shell aliases.

A shell is just the program that provides the command-line interface in your terminal.

Shell aliases are user-defined commands that expand into something else when you type them into your terminal.

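Think of aliases as fancy macros.

Modern versions of macOS use zsh as the default shell, while older versions of macOS and many Linux distributions default to bash. You can check which shell you’re using with:

echo "$SHELL"

Either way, both shells allow you to create shell aliases, which is what we’re after.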
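Here’s a minimal sketch of that macro-like expansion, runnable as a bash script. The hello alias is purely illustrative. In your terminal, aliases expand automatically. The shopt line is only there because bash doesn’t expand aliases inside scripts by default.

```shell
#!/usr/bin/env bash
# Interactive shells expand aliases automatically; bash scripts only do so
# when expand_aliases is on (other POSIX shells just ignore the failing shopt).
shopt -s expand_aliases 2>/dev/null || true

# Typing "hello" is replaced by the alias text before the command runs
alias hello='echo Hello,'
hello world
# prints: Hello, world
```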

For zsh, we’ll be putting our aliases in the ~/.zshrc file. For bash, we’ll be putting our aliases in ~/.bashrc.

The ~/ prefix means “in my home directory”, and for the curious, rc stands for “run commands”.

Both files are literally just a list of commands that are run when the shell is started up, and we can leverage that to add our aliases in.
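If you’re not sure which of the two files applies, the choice can be scripted. This is a minimal sketch. rc_file_for is a hypothetical helper name: it maps a shell path, such as the value of $SHELL, to the rc file named above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: map a shell path (such as the value of $SHELL)
# to the rc file where this article puts aliases for that shell.
rc_file_for() {
  # ${1##*/} strips the directory part, e.g. /bin/zsh -> zsh
  case "${1##*/}" in
    zsh)  echo "$HOME/.zshrc" ;;
    bash) echo "$HOME/.bashrc" ;;
    *)    return 1 ;;  # another shell: its config file lives elsewhere
  esac
}

rc_file_for "$SHELL" || echo "Not zsh or bash: $SHELL"
```

On a default macOS setup, rc_file_for "$SHELL" prints the full path to your ~/.zshrc.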

After you change something in one of the rc files (such as adding an alias), you need to re-run the commands in the file so the changes take effect in your current terminal:

For zsh: source ~/.zshrc

For bash: source ~/.bashrc

For more on aliases, check out the How to Create Bash Aliases and How to Configure and use Aliases in Zsh articles.

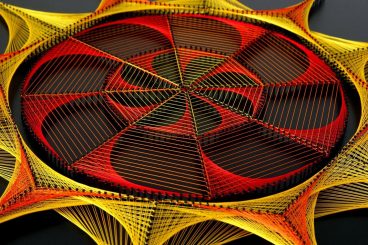

Anatomy of a Docker Alias

While you don’t need to fully grok the Docker aliases we describe here to use them, let’s have a quick look at the components in the aliases we’re using.

Here’s a breakdown of an example alias we might use to run node in a Docker container:

alias node='docker run --rm -it -v "$PWD":/app -w /app node:16-alpine '

- alias — tells our shell we’re defining an alias

- node — the name of our alias (what we type in terminal to expand the alias)

- docker — the Docker CLI command

- run — tells Docker to run the container

- --rm — automatically remove the container when it exits

- -it — run the container interactively: -i keeps standard input open and -t allocates a pseudo-terminal, so we can type further input if the tool asks for it

- -v "$PWD":/app — mount the current directory (the value of $PWD) as a volume at /app in the container. This lets the container read & write files in the current directory on our computer. The quotes around $PWD make file paths that contain spaces work properly

- -w /app — set the working directory to /app in the container

- node:16-alpine — use the node Docker image with the tag 16-alpine to create the container

Again, this doesn’t all need to make sense to you immediately; you can still use the aliases listed below.

But it’s here for you if you decide to modify the aliases, or create your own.
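If you do modify an alias, it helps to check what it actually expands to. Here’s a minimal sketch, assuming bash, where alias followed by a name prints that alias’s definition without running anything.

```shell
#!/usr/bin/env bash
# The node alias from the breakdown above
alias node='docker run --rm -it -v "$PWD":/app -w /app node:16-alpine '

# Print the definition without running anything (no Docker needed)
alias node
```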

Now let’s get on to the aliases we actually use to run various Docker images!

Composer alias

Composer is a package manager for PHP. To run it via a Docker container, just add this alias to the rc file for your shell:

alias composer='docker run --rm -it -v "$PWD":/app -v ${COMPOSER_HOME:-$HOME/.composer}:/tmp composer '

Then source your rc file (see above) to reload it, and you’ll have composer available globally in your terminal, without ever having installed it.

What it does is it runs the official composer Docker container, mounts the current directory as a shared volume so the Docker container can write to it, and then passes the composer commands into the container to run them.

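It also mounts your Composer home directory ($COMPOSER_HOME if it’s set, otherwise ~/.composer) to /tmp in the container. That is where the official image keeps Composer’s home directory, including its cache, so the cache persists between runs.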
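The ${COMPOSER_HOME:-$HOME/.composer} part is standard shell parameter expansion. Here’s a minimal sketch of how it resolves. composer_home_dir is a hypothetical wrapper used only to show the expansion, and /opt/composer is an arbitrary example path.

```shell
#!/usr/bin/env bash
# ${VAR:-default} expands to $VAR if it is set and non-empty, and to the
# default otherwise. This is how the composer alias picks the host directory
# it mounts at /tmp in the container. composer_home_dir is a hypothetical name.
composer_home_dir() {
  echo "${COMPOSER_HOME:-$HOME/.composer}"
}

composer_home_dir                              # ~/.composer unless COMPOSER_HOME is set
COMPOSER_HOME=/opt/composer composer_home_dir  # prints: /opt/composer
```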

So you can do things like:

composer create-project craftcms/cms craft-test

But what if for some reason we need to run the older composer 1.x? No problem, we can set up an alias for that too:

alias composer1='docker run --rm -it -v "$PWD":/app -v ${COMPOSER_HOME:-$HOME/.composer}:/tmp composer:1 '

Now if we type composer1 in our terminal, it’ll use Composer v1.x.

In the composer:1 in the alias above, composer is the name of the Docker Hub image, and 1 is the tag, which lets you specify which version of the image you want to use.

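Node alias

Node.js is a JavaScript runtime. To run it via a Docker container, just add this alias to the rc file for your shell:

alias node='docker run --rm -it -v "$PWD":/app -w /app node:16-alpine '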

Then source your rc file (see above) to reload it, and you’ll have node available globally in your terminal, without ever having installed it.

What it does is it runs the official node Docker container, mounts the current directory as a shared volume so the Docker container can write to it, and then passes the node commands into the container to run them.

Then you can do things like:

node npm install

This uses Node 16 by default (as indicated by the 16-alpine tag), but what if you wanted to be able to run older versions of Node? No problem, add these aliases:

alias node14='docker run --rm -it -v "$PWD":/app -w /app node:14-alpine '

alias node12='docker run --rm -it -v "$PWD":/app -w /app node:12-alpine '

alias node10='docker run --rm -it -v "$PWD":/app -w /app node:10-alpine '

Then you can instantly run commands using Node 14, 12, or 10 by using node14, node12, and node10 respectively!
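Since these aliases differ only in the tag, they could also be generated in a loop. This is a minimal sketch of that alternative, not how the aliases above were written. The one subtle part is escaping \$PWD so it expands each time an alias is used, rather than once when the alias is defined.

```shell
#!/usr/bin/env bash
# Define node14, node12 and node10 in one loop instead of one line each.
# \$PWD is escaped so it's expanded when an alias is used, not when it's defined.
for v in 14 12 10; do
  alias "node$v=docker run --rm -it -v \"\$PWD\":/app -w /app node:$v-alpine "
done

alias node14 node12 node10   # print the three generated definitions
```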

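npm alias

npm is the package manager that ships with Node.js. To run it via a Docker container, just add this alias to the rc file for your shell:

alias npm='docker run --rm -it -v "$PWD":/app -w /app node:16-alpine npm '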

Then source your rc file (see above) to reload it, and you’ll have npm available globally in your terminal, without ever having installed it.

Note that if we name this alias npm, we can no longer use node npm, because the trailing space in the node alias makes the shell expand the npm alias as well. Either always use npm on its own, or rename this alias to npmx or something else.

What it does is it runs the same official node Docker container, mounts the current directory as a shared volume so the Docker container can write to it, and then runs npm and also passes your npm commands into the container to run them.

Then you can do things like:

npm install
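The node npm clash described above comes from a shell rule: when an alias’s text ends in a space, the next word is also checked for alias expansion. Here’s a minimal sketch that makes the clash visible without Docker. Two assumptions keep it short: echo stands in for docker run, and the alias bodies are trimmed down.

```shell
#!/usr/bin/env bash
# bash only expands aliases in scripts when expand_aliases is on;
# other POSIX shells expand them anyway, so ignore a failing shopt.
shopt -s expand_aliases 2>/dev/null || true

# Shortened stand-ins for the real aliases: echo prints the command
# that would run instead of starting a Docker container.
alias node='echo docker run node:16-alpine '
alias npm='echo docker run node:16-alpine npm '

# The node alias ends in a space, so the next word ("npm") is alias-expanded too:
node npm install
# prints: docker run node:16-alpine echo docker run node:16-alpine npm install

# With the npm alias renamed, "npm" passes through as a plain word:
unalias npm
alias npmx='echo docker run node:16-alpine npm '
node npm install
# prints: docker run node:16-alpine npm install
```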

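Deno alias

Deno is a runtime for JavaScript and TypeScript. To run it via a Docker container, just add this alias to the rc file for your shell:

alias deno='docker run --rm -it -v "$PWD":/app -w /app denoland/deno '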

Then source your rc file (see above) to reload it, and you’ll have deno available globally in your terminal, without ever having installed it.

What it does is it runs the official deno Docker container, mounts the current directory as a shared volume so the Docker container can write to it, and then passes the deno commands into the container to run them.

Then you can do things like:

deno run /app/main.ts

AWS alias

The AWS Command Line Interface lets you manage your AWS services from your terminal. To run it via a Docker container, just add this alias to the rc file for your shell:

alias aws='docker run --rm -it -v ~/.aws:/root/.aws amazon/aws-cli '

Then source your rc file (see above) to reload it, and you’ll have aws available globally in your terminal, without ever having installed it.

What it does is it runs the official aws-cli Docker container, mounts the hidden ~/.aws directory in your home directory as a shared volume so the Docker container can read and write your AWS configuration, and then passes the aws commands into the container to run them.

So you can do things like:

aws ec2 describe-instances
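The aws alias above only mounts ~/.aws, so the container can’t see files in your current directory. Here’s a minimal sketch of a variant that also mounts the current directory. It assumes /aws as the in-container path, which matches the AWS CLI Docker instructions as far as I know. Because -w sets the working directory explicitly, the exact path doesn’t really matter.

```shell
#!/usr/bin/env bash
# Variant of the aws alias (an assumption, not the article's version): also
# mount the current directory at /aws and make it the working directory,
# so aws commands can read and write local files.
alias aws='docker run --rm -it -v ~/.aws:/root/.aws -v "$PWD":/aws -w /aws amazon/aws-cli '

alias aws   # print the definition to check it
```

With that in place, something like aws s3 cp report.pdf s3://my-bucket/ could upload a file from the current directory. Both report.pdf and my-bucket are hypothetical names.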

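FFmpeg alias

FFmpeg is a command-line tool for converting and processing audio and video. To run it via a Docker container, just add this alias to the rc file for your shell:

alias ffmpeg='docker run --rm -it -v "$PWD":/app -w /app jrottenberg/ffmpeg '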

Then source your rc file (see above) to reload it, and you’ll have ffmpeg available globally in your terminal, without ever having installed it.

What it does is it runs the jrottenberg/ffmpeg Docker container (a community-maintained ffmpeg image), mounts the current directory as a shared volume so the Docker container can write to it, and then passes the ffmpeg commands into the container to run them.

So you can do things like:

ffmpeg -i input.mp4 output.avi

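Yeoman alias

Yeoman is a scaffolding tool for generating new projects. To run it via a Docker container, just add this alias to the rc file for your shell:

alias yo='docker run --rm -it -v "$PWD":/app nystudio107/node-yeoman:16-alpine '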

Then source your rc file (see above) to reload it, and you’ll have yo available globally in your terminal, without ever having installed it.

What it does is it runs a yeoman Docker container, mounts the current directory as a shared volume so the Docker container can write to it, and then passes the yo commands into the container to run them.

So you can do things like:

yo webapp

Tree alias with parameters

tree is a CLI tool that shows the hierarchical tree structure of a directory. In this case, we want to be able to pass CLI parameters down to the tree command, so we can tell it how deep a file system tree we want to see.

In order to do this, we can have the alias define and immediately call a function, which receives the CLI parameters and passes them into the tree command inside of the Docker container.

To run it via a Docker container, just add this alias to the rc file for your shell:

alias tree='f(){ docker run --rm -it -v "$PWD":/app johnfmorton/tree-cli tree "$@"; unset -f f; }; f'

Then source your rc file (see above) to reload it, and you’ll have tree available globally in your terminal, without ever having installed it.

What it does is it runs a tree Docker container created by John Morton, mounts the current directory as a shared volume so the Docker container can read it, and then passes the tree command along with any parameters into the container to run them.

Inside the function, "$@" expands to every parameter we typed after tree, including flags (anything preceded by a -). Each one stays a separate word, so they are all passed along to the tree command inside the Docker container.
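Here’s a minimal sketch of that forwarding without Docker. showargs is a hypothetical alias with the same shape as the tree alias, with printf in place of docker run so you can see exactly which arguments arrive.

```shell
#!/usr/bin/env bash
# bash only expands aliases in scripts when expand_aliases is on.
shopt -s expand_aliases 2>/dev/null || true

# Same shape as the tree alias, with printf standing in for docker run:
# each argument the function receives is printed on its own line in brackets.
alias showargs='f(){ printf "[%s]\n" "$@"; unset -f f; }; f'

showargs -l 2 "my dir"
# prints:
# [-l]
# [2]
# [my dir]
```

The quoted "my dir" arrives as a single argument, which is why "$@" (with the quotes) is used rather than a bare $@.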

So you can do things like:

tree

✔
/~webdev
├── boilerplate
├── contrib
├── craft
├── generator-craftplugin
├── go
├── misc
├── node
├── rust
├── sites
└── twig

directory: 10

And we can also pass in CLI parameters to tell it we want to see 2 levels deep:

tree -l 2

✔
/~webdev
├── boilerplate
| ├── craft
| ├── craft-plugin-buildchain
| ├── craft-plugin-vite-buildchain
| ├── craft-vite-buildchain
| ├── plugindev
| └── vitepress-starter
├── contrib
| ├── awesome-vite
| ├── cms
| ├── craft-autocomplete
| ├── craft-sprig
| ├── craft-sprig-core
| ├── docker
| ├── europa-museum
| ├── idea-php-symfony2-plugin
| ├── minify-lib
| ├── my-vanila-app
| ├── rackspace
| ├── spoke-and-chain
| ├── vite
| ├── vite-plugin-favicon
| ├── vite-symlink-issue
| ├── vitepress
| └── vizy
├── craft
...

directory: 121 file: 5

Making your own Docker Aliases

Hopefully, you can see a pattern emerging in terms of how to construct an alias that runs a Docker container for you, so you can create your own, too!

A typical pattern for me is going to Docker Hub, searching for the tool I’m interested in, and then creating a shell alias for it… and away we go!

Usually, a Docker image’s page on Docker Hub lists example usage commands, which makes this pretty painless.
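That pattern can itself be captured in a small shell function. This is a minimal sketch. dockalias is a hypothetical helper name. It assumes the tool works on files in the current directory mounted at /app, like the node, npm, deno and ffmpeg aliases above. Tools that need other mounts, such as aws, still need a hand-written alias.

```shell
#!/usr/bin/env bash
# Hypothetical helper: "dockalias NAME IMAGE" defines an alias that runs IMAGE
# with the current directory mounted at /app and used as the working directory.
# \$PWD is escaped so it's expanded when the alias is used.
dockalias() {
  alias "$1=docker run --rm -it -v \"\$PWD\":/app -w /app $2 "
}

# Recreate two of the aliases above with it
dockalias deno denoland/deno
dockalias ffmpeg jrottenberg/ffmpeg

alias deno ffmpeg   # print the generated definitions
```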

Making your own Docker Images

Making your own Docker images is a bit of a leap from just using images that others have created, and is beyond the scope of this article.

In order to build Docker images yourself, it will require learning a bit more about the ins & outs of Docker, and how it works.

I can highly recommend the Mastering Docker video series from Bret “The Captain” Fisher if your interest is piqued.

You can also look at the nystudio107/docker-yeoman repository for a simple Dockerfile example, with GitHub Actions that build & push the images to Docker Hub.

Tying Off at the Dock

There’s a reason why Docker has such a strong following in the devops and enterprise worlds, but we can dip our toe gently into the water, and still reap the benefits.

If you want all of the aliases listed above in one place for a single copy & paste, here they are:

# Docker aliases
alias composer='docker run --rm -it -v "$PWD":/app -v ${COMPOSER_HOME:-$HOME/.composer}:/tmp composer '
alias composer1='docker run --rm -it -v "$PWD":/app -v ${COMPOSER_HOME:-$HOME/.composer}:/tmp composer:1 '
alias node='docker run --rm -it -v "$PWD":/app -w /app node:16-alpine '
alias node14='docker run --rm -it -v "$PWD":/app -w /app node:14-alpine '
alias node12='docker run --rm -it -v "$PWD":/app -w /app node:12-alpine '
alias node10='docker run --rm -it -v "$PWD":/app -w /app node:10-alpine '
alias npm='docker run --rm -it -v "$PWD":/app -w /app node:16-alpine npm '
alias deno='docker run --rm -it -v "$PWD":/app -w /app denoland/deno '
alias aws='docker run --rm -it -v ~/.aws:/root/.aws amazon/aws-cli '
alias ffmpeg='docker run --rm -it -v "$PWD":/app -w /app jrottenberg/ffmpeg '
alias yo='docker run --rm -it -v "$PWD":/app nystudio107/node-yeoman:16-alpine '
alias tree='f(){ docker run --rm -it -v "$PWD":/app johnfmorton/tree-cli tree "$@"; unset -f f; }; f'

Incidentally, when you do move to a new computer, all you have to do is copy your aliases over, and all of your tools are now “installed”. 🎉
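One way to make that copy even simpler is to keep the Docker aliases in a file of their own and load it from your rc file. This is a minimal sketch. Both the ~/.docker_aliases file and the load_aliases helper are hypothetical names.

```shell
#!/usr/bin/env bash
# Hypothetical helper: source a file of aliases only if it exists, so a
# machine without the file still starts its shell cleanly.
load_aliases() {
  if [ -f "$1" ]; then
    . "$1"
  fi
}

# In ~/.zshrc or ~/.bashrc:
load_aliases "$HOME/.docker_aliases"
```

Moving to a new computer then means copying a single file plus the one load_aliases line.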

If this has given you a taste for Docker, check out the articles An Annotated Docker Config for Frontend Web Development and Running Node.js in Docker for local development.

If you want to use your shell aliases in a Makefile, check out the SHELL ALIASES IN MAKEFILES section of the Using Make & Makefiles to Automate your Frontend Workflow article!

Don’t worry, the global aliases you’ve defined here won’t override the local aliases you’ve set up in your Makefiles.

Thanks to Matt Gray for sharing some of his favorite Docker aliases!

Happy Dockerizing!